flink-flink学习之旅(二)

推荐 原创目前flink中的资源管理主要是使用的hadoop圈里的yarn,故此需要先搭建hadoop环境并启动yarn和hdfs,由于看到的教程都是集群版,现实是只有1台机器,故此都是使用这台机器安装。

1.下载对应hadoop安装包

https://dlcdn.apache.org/hadoop/common/hadoop-3.3.5/hadoop-3.3.5.tar.gz

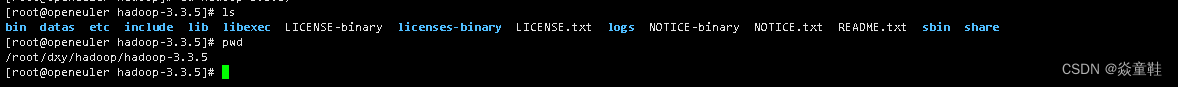

2.解压到指定路径比如这里我选择的如下:

3.修改hadoop相关配置

3.修改hadoop相关配置

cd /root/dxy/hadoop/hadoop-3.3.5/etc/hadoop

vi core-site.xml 核心配置文件

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- 指定NameNode的地址 -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://10.26.141.203:8020</value>

</property>

<!-- hadoop数据的存储目录 -->

<property>

<name>hadoop.tmp.dir</name>

<value>/root/dxy/hadoop/hadoop-3.3.5/datas</value>

</property>

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

</configuration>vi hdfs-site.xml 修改hdfs配置文件

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- 指定NameNode的外部web访问地址 -->

<property>

<name>dfs.namenode.http-address</name>

<value>10.26.141.203:9870</value>

</property>

<!-- 指定secondary NameNode的外部web访问地址 -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>10.26.141.203:9868</value>

</property>

</configuration>

vi yarn-site.xml 修改yarn配置

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<!--指定MR走shuffle -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!--指定ResourceManager的地址-->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>10.26.141.203</value>

</property>

<!--环境变量的继承-->

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

</configuration>

~ vim mapred-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- 指定MapReduce程序运行在Yarn上 -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>vi workers 填上 服务器ip 如:

10.26.141.203

修改环境变量:

export HADOOP_HOME=/root/dxy/hadoop/hadoop-3.3.5

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export HADOOP_CLASSPATH=`hadoop classpath`

source /etc/profile

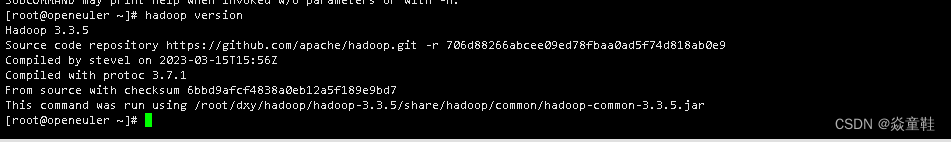

完成后可以执行hadoop version 查看是否配置成功如下:

hdfs第一次启动时需要格式化

hdfs namenode -format

启动yarn和hdfs

sbin/start-dfs.sh

sbin/start-yarn.sh

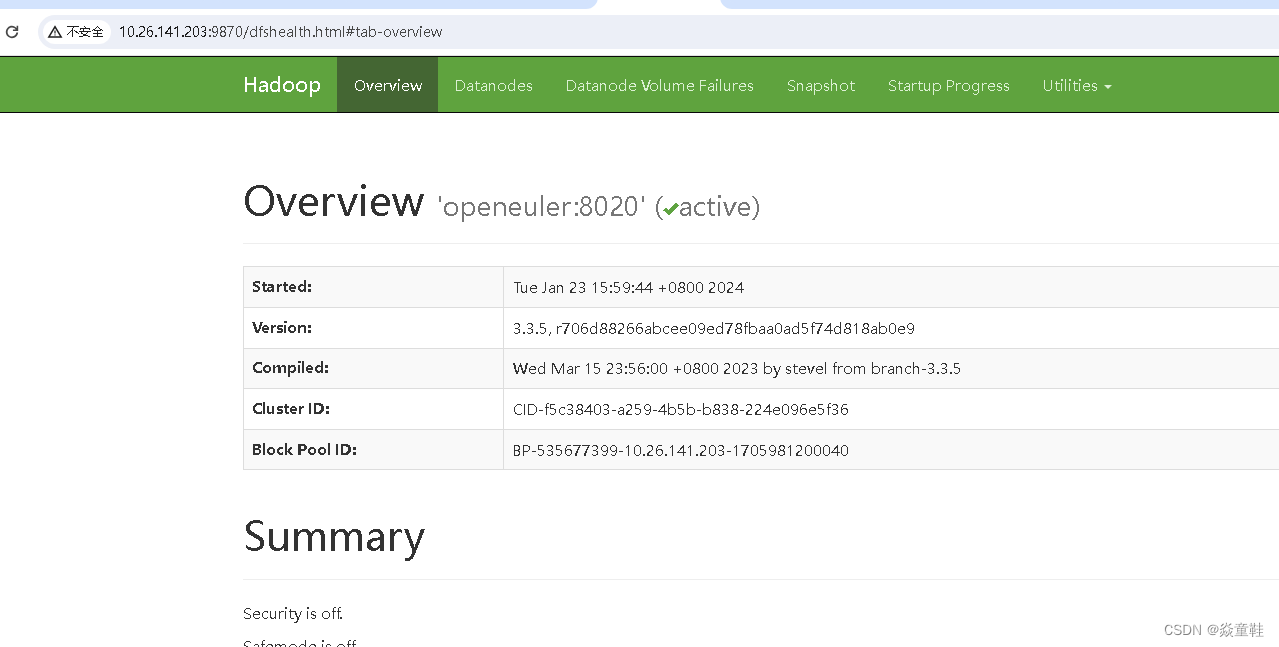

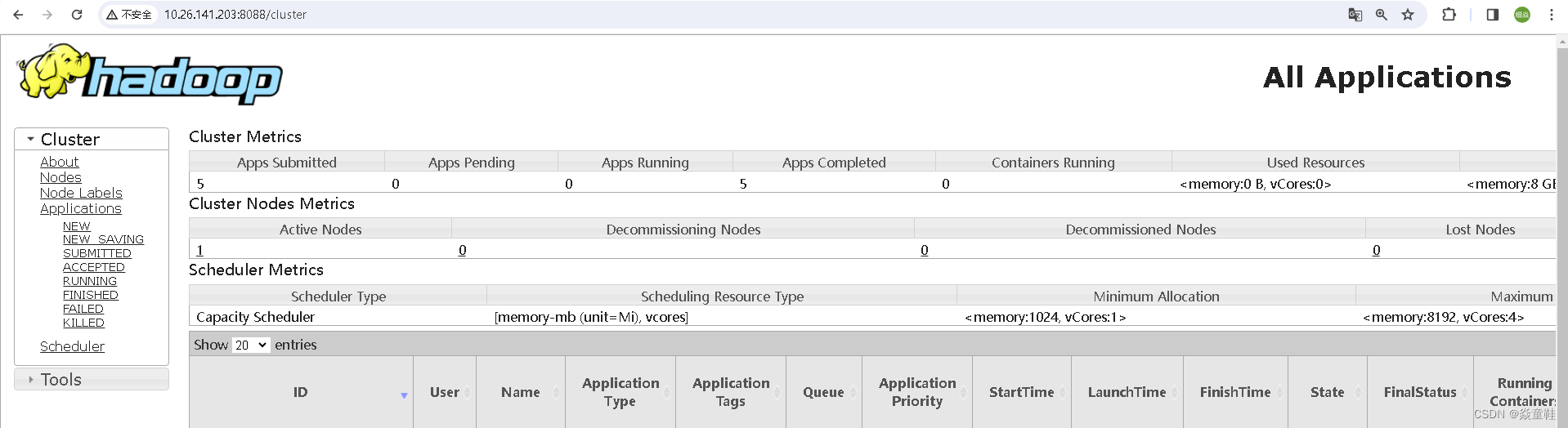

使用界面访问是否启动成功:

使用application运行flink任务并提交到yarn上如下:

bin/flink run-application -t yarn-application -c com.dxy.learn.demo.SocketStreamWordCount FlinkLearn-1.0-SNAPSHOT.jar

更多【flink-flink学习之旅(二)】相关视频教程:www.yxfzedu.com

相关文章推荐

- 编辑器-Linux编辑器:vim的简单介绍及使用 - 其他

- 编辑器-将 ONLYOFFICE 文档编辑器与 С# 群件平台集成 - 其他

- 媒体-行业洞察:分布式云如何助力媒体与娱乐业实现创新与增长? - 其他

- 编辑器-wpf devexpress设置行和编辑器 - 其他

- 媒体-海外媒体发稿:彭博社发稿宣传中,5种精准营销方式 - 其他

- 编辑器-广州华锐互动:VR互动实训内容编辑器助力教育创新升级 - 其他

- hadoop-WPF ToggleButton 主题切换动画按钮 - 其他

- python-pytorch-gpu(Anaconda3+cuda+cudnn) - 其他

- gaussdb-大数据-之LibrA数据库系统告警处理(ALM-12039 GaussDB主备数据不同步) - 其他

- hive-大数据毕业设计选题推荐-智慧消防大数据平台-Hadoop-Spark-Hive - 其他

- python-【PyTorch教程】如何使用PyTorch分布式并行模块DistributedDataParallel(DDP)进行多卡训练 - 其他

- 编辑器-Linux编辑器---vim的使用 - 其他

- 矩阵-软文推广中媒体矩阵的优势在哪儿 - 其他

- 运维-CentOS 中启动 Jar 包 - 其他

- c#-C#中Linq AsEnumeralbe、DefaultEmpty和Empty的使用 - 其他

- jvm-JVM关键指标监控(调优) - 其他

- 编程技术-JavaScript + setInterval实现简易数据轮播效果 - 其他

- 编程技术-Datawhale智能汽车AI挑战赛 - 其他

- 编程技术-针对CSP-J/S的每日一练:Day 6 - 其他

- 编程技术-【vue实战项目】通用管理系统:api封装、404页 - 其他

2):严禁色情、血腥、暴力

3):严禁发布任何形式的广告贴

4):严禁发表关于中国的政治类话题

5):严格遵守中国互联网法律法规

6):有侵权,疑问可发邮件至service@yxfzedu.com