Big Data - Spark: Processing JSON-Formatted Data, with Exercises

Previous post: Big Data - MapReduce: Processing JSON-Formatted Data, with Exercises (CSDN blog)

16.7 Using JSON in Spark

As in the previous post, we use the same data to compute the average rating of each movie.

    {"mid":1,"rate":6,"uid":"u001","ts":15632433243}
    {"mid":1,"rate":4,"uid":"u002","ts":15632433263}
    {"mid":1,"rate":5,"uid":"u003","ts":15632403263}
    {"mid":1,"rate":3,"uid":"u004","ts":15632403963}
    {"mid":1,"rate":4,"uid":"u004","ts":15632403963}
    {"mid":2,"rate":5,"uid":"u001","ts":15632433243}
    {"mid":2,"rate":4,"uid":"u002","ts":15632433263}
    {"mid":2,"rate":5,"uid":"u003","ts":15632403263}
    {"mid":2,"rate":3,"uid":"u005","ts":15632403963}
    {"mid":2,"rate":7,"uid":"u005","ts":15632403963}
    {"mid":2,"rate":6,"uid":"u005","ts":15632403963}
    {"mid":3,"rate":2,"uid":"u001","ts":15632433243}
    {"mid":3,"rate":1,"uid":"u002","ts":15632433263}
    {"mid":3,"rate":3,"uid":"u005","ts":15632403963}
    {"mid":3,"rate":8,"uid":"u005","ts":15632403963}
    {"mid":3,"rate":7,"uid":"u005","ts":15632403963}

Spark code
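
The Spark job in this section parses each line into a `Movie` imported from `com.doit.day0126`, a class from an earlier exercise that is not reproduced in this post. The sketch below is only a guess at one workable shape: a plain bean with a no-argument constructor and getters/setters generated by `@BeanProperty`, which is the style fastjson's bean-based deserialization generally expects. The field types are assumptions read off the sample data; note that `ts` values such as 15632433243 do not fit in an `Int`, so `Long` is used. The `package` line is left out so the snippet runs on its own.

```scala
import scala.beans.BeanProperty

// Hypothetical stand-in for com.doit.day0126.Movie (the real class is not shown in the post).
// @BeanProperty generates getMid/setMid etc., so a bean-style JSON mapper can populate it.
// Serializable lets Spark ship instances between tasks.
class Movie extends Serializable {
  @BeanProperty var mid: Int = 0       // movie ID
  @BeanProperty var rate: Int = 0      // rating given by the user
  @BeanProperty var uid: String = ""   // user ID, e.g. "u001"
  @BeanProperty var ts: Long = 0L      // timestamp; exceeds Int range in the sample data

  override def toString: String =
    s"Movie(mid=$mid, rate=$rate, uid=$uid, ts=$ts)"
}
```

Because the fields are public `var`s, accessors such as `_.mid` and `_.rate` used in the job work unchanged against this shape.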

/**

* Test02.scala

*

* Scala code for calculating the average rating of each movie.

*/

package com.doit.day0130

import com.doit.day0126.Movie

import com.alibaba.fastjson.JSON

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object Test02 {

def main(args: Array[String]): Unit = {

// 创建SparkConf对象,并设置应用程序名称和运行模式

val conf = new SparkConf()

.setAppName("Starting...")

.setMaster("local[*]")

// 创建SparkContext对象,并传入SparkConf对象

val sc = new SparkContext(conf)

// 读取数据文件"movie.json",并将其转换为RDD

val rdd1 = sc.textFile("data/movie.json")

// 将RDD中的每一行转换为Movie对象,并形成新的RDD

val rdd2: RDD[Movie] = rdd1.map(line => {

// 使用JSON解析器将每一行转换为Movie对象

val mv = JSON.parseObject(line, classOf[Movie])

mv

})

// 对RDD进行分组操作,以电影ID作为分组依据

val rdd3: RDD[(Int, Iterable[Movie])] = rdd2.groupBy(_.mid)

// 计算每个电影的评分总和和数量,并计算平均评分

val rdd4 = rdd3.map(tp => {

// 获取电影ID

val mid = tp._1

// 计算评分总和

val sum = tp._2.map(_.rate).sum

// 计算电影数量

val size = tp._2.size

// 计算平均评分

(mid, 1.0 * sum / size)

})

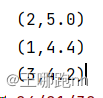

// 打印出每部电影的平均评分

rdd4.foreach(println)

}

}
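
To sanity-check what the job should print, the same averages can be worked out without Spark. The sketch below is not part of the original post: `AverageCheck`, `ratings`, and `averages` are illustrative names, and the `(mid, rate)` pairs are copied by hand from the sample data. Instead of grouping, it folds each rating into a running `(sum, count)` per movie ID.

```scala
object AverageCheck {
  // (mid, rate) pairs copied from the sample data in this post
  val ratings: Seq[(Int, Int)] = Seq(
    (1, 6), (1, 4), (1, 5), (1, 3), (1, 4),
    (2, 5), (2, 4), (2, 5), (2, 3), (2, 7), (2, 6),
    (3, 2), (3, 1), (3, 3), (3, 8), (3, 7)
  )

  // Fold every rating into a running (sum, count) per movie ID,
  // then divide to get the average
  def averages(rs: Seq[(Int, Int)]): Map[Int, Double] = {
    val totals = rs.foldLeft(Map.empty[Int, (Int, Int)]) {
      case (acc, (mid, rate)) =>
        val (sum, count) = acc.getOrElse(mid, (0, 0))
        acc.updated(mid, (sum + rate, count + 1))
    }
    totals.map { case (mid, (sum, count)) => mid -> sum.toDouble / count }
  }

  def main(args: Array[String]): Unit =
    // Prints (1,4.4), (2,5.0), (3,4.2), one per line
    averages(ratings).toSeq.sortBy(_._1).foreach(println)
}
```

The sums are 22/5, 30/6, and 21/5, so the Spark job should report 4.4, 5.0, and 4.2 for movies 1, 2, and 3 (in no guaranteed order, since `foreach` runs per partition). The same `(sum, count)` shape is what one would typically feed to `reduceByKey` after mapping each record to `(mid, (rate, 1))`, which avoids materializing every group the way `groupBy` does; that variant is a suggestion, not something the original post uses.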
